we have prepared the following guide for creators. Of course, as the model continues to be iterated and explored, this guide will also continue to be updated.

We hope to improve it together with all creators. If you find any tips for a better model, please feel free to contact us.

Together, we can refine the guide for taming the AI and help everyone create more and better quality works.

Text to video

Enter a piece of text, and large language model will generate a 5s or 10s video based on the text, turning the text into a video. It now supports two generation modes: “Standard Mode” and “Professional Mode”.

Standard Mode generates faster, and Professional Mode has better picture quality. Most AI video generator also supports three aspect ratios: 16:9, 9:16 and 1:1, to meet your video creation needs more diversely.

We know that “Prompt”, as the main interactive language of the Text to video large language model, will directly determine the content of the video returned by the model. Therefore, how to use effective Prompt to complete AI video creation is what every creator wants to understand and learn. As the new life of the AI video large language model, AI is still being iterated and updated. We need to continue to explore and give full play to the potential of these model.

so that we can better play with AI videos. We have prepared the AI video generator prompt formula for your reference:

Prompt word = Main body (description of the main body) + Movement + Scene (description of the scene) + (cinematography + lighting + atmosphere)

—the content in brackets is optional

Main body: The main body is the main object of expression in the video and an important embodiment of the theme of the image. Examples include people, animals, plants, and objects;

Subject description: A description of the subject’s appearance and posture, which can be listed in multiple short sentences. For example, movement, hairstyle and hair color, clothing, facial features, posture, etc.;

Subject movement: A description of the subject’s movement, including stillness and movement. The movement should not be too complicated, and should be able to be shown in a 5-second video;

Scene: The scene is the environment in which the subject is located, including the foreground and background;

Scene description: A detailed description of the subject’s environment, which can be listed in multiple short sentences, but not too many, as long as it fits within the 5-second video. Examples include indoor scenes, outdoor scenes, and natural scenes.

Camera language: This refers to the use of various camera applications and the connection and switching between them to convey a story or message and create a specific visual effect and emotional atmosphere. Examples include super wide-angle shots, bokeh, close-ups,

telephoto lens shots, ground shots, top shots, aerial shots, depth of field, etc.; (Note: this is distinguished from camera movement control)

Light and shadow: Light and shadow are key elements that give a photographic work its soul. The use of light and shadow can make a photo more profound and more emotional. We can create works with a sense of layering and emotional expressiveness through light and shadow. Examples include ambient light

shooting, morning light, sunset, light and shadow, the Dunninger effect, lighting, etc.;

Atmosphere: A description of the atmosphere of the intended video scene. For example, a lively scene, cinematic coloring, warm and beautiful, etc.

The core components of the above formula are the subject, movement and scene, which are also the simplest and most basic units for describing a video scene. When we want to describe the subject and scene in more detail, we only need to list multiple descriptive phrases, maintaining the completeness of the desired elements in the Prompt. AI will then expand the prompt words based on our expression to generate a video that meets expectations.

For example, if we want to describe a scene of a panda reading a book in a coffee shop, we can add more details about the subject and the scene: “A panda wearing black-framed glasses is reading a book in a coffee shop. The book is on the table, along with a cup of steaming coffee. Next to it is the window of the coffee shop.” This way, the video generated by AI will be more specific and controllable.

If you want to add some camera language and lighting atmosphere, you can also try “shot from medium close-up, background blurred, mood lighting, a panda wearing black-framed glasses reading in a coffee shop, the book on the table, a cup of coffee on the table, steaming, next to the window of the coffee shop, cinematic grading”.

| prompt |

A panda reading in a coffee shop

A panda wearing black-framed glasses is reading in a coffee shop. The book is on the table, along with a cup of steaming coffee. Next to it is a window of the coffee shop.

The shot is taken from a medium distance, with a blurred background and atmospheric lighting. A panda wearing black-framed glasses is reading in a coffee shop. The book is on the table, along with a cup of steaming coffee. Next to it is a window of the coffee shop. Cinematic coloring

| video |

The significance of the film grading formula is to help you better describe the video image you want. We can also use our imagination to communicate with AI freely and boldly without being limited by the formula, and there may be even more surprising results! Here are some high-quality examples shared by creators, let’s take a look~

Some high-quality examples

The following video examples are shared by creators

| video |

| prompt | Creative description: A panda is eating hotpot with chopsticks in the background is the streetVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s | Creative description: A Pikachu sitting on a chairVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s | Creative description: A polar bear playing the violin in the snowVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: A bee with a puppy’s headVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s |

| video |

| prompt | Creative description: Morning mist, sunrise, lens flare, cool breeze, a young Chinese woman with delicate features, long hair blown about by the wind, strands of hair drifting across her face, wearing a summer dress, beach at the seaside in the backgroundVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: Indoor shot, close-up, a Chinese child eating dumplingsVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: A beautiful Chinese-style girlVideo ratio: 16:9Generate mode: Professional ModeDuration: 10s | Creative description: A Chinese girl holding a pink balloon smiling happily at the playground, with a slide in the backgroundVideo ratio: 16:9Generate mode: Professional ModeDuration: 10s |

| video |

| prompt | Creative description: A bird’s-eye view shot of blue waves crashing against the rocks, the scene is magnificent and grandVideo ratio: 16:9Generate mode: Professional ModeDuration: 10s | Creative description: A medieval sailing ship sailing on the sea, a misty night, the bright moonlight creates a strange atmosphereVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s | Creative description: First-person perspective, high-speed flight, symmetrical composition, rotation, dark clouds with countless bolts of lightning, motion blurVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: The camera zooms into the beacon tower on the Great Wall. First-person perspective, high-speed flight, symmetrical composition, motion blur, atmospheric lightingVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s |

| video |

| prompt | Creative description: A space fighter speeds through a huge sci-fi interior passage, bursts out of the passage and flies into space. At the end of the passage, you can see a space battleVideo ratio: 16:9Generate mode: Professional ModeDuration: 10s | Creative description: A racing car speeds across the surface of the moon, with a space background and a zoom shiftVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: A bird’s-eye view of a cyberpunk cityVideo ratio: 16:9Generate mode: Professional ModeDuration: 10s | Creative description: A cyberpunk cityscape on an alien planet, with futuristic buildings. The camera slowly moves forward, and there are pedestrians on the streetVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s |

| video |

| prompt | Creative description: A woman in an alley having a gunfight with someone. Blade Runner style, neon, ambient lightingVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: first-person perspective, a man driving a car on a night street, with fireworks blooming in front of himVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s | Creative description: a panning shot of a handsome young man in ancient costume, dressed in white, sitting by a pond, closing his eyes to restVideo ratio: 16:9Generate mode: Standard ModeDuration: 5s | Creative description: a woman’s back, wearing a red long shirt, standing on the roof. The buildings in the distance are smokingVideo ratio: 16:9Generate mode: Professional ModeDuration: 5s |

Some usage tips

Try to use simple words and sentence structures, and avoid using overly complex language.

The content of the picture should be as simple as possible, and can be completed in 5s to 10s;

using words such as “Oriental artistic conception, China, Asia” can more easily generate a Chinese style and Chinese people;

the current video large language model is not sensitive to numbers, for example, “10 puppies on the beach”, the quantity is difficult to keep consistent;

for split-screen scenes, you can use the prompt: “4 camera angles, spring, summer, autumn and winter”;

At this stage, it is difficult to generate complex physical movements, such as the bouncing of balls and high-altitude throws.

Image to video

Input an image, and the large language model will generate a 5s or 10s video based on the image understanding, turning the image into a video frame. Input an image and a text description, and the large language model will generate a video from the image based on the text expression.

Two generation modes, “Standard Mode” and “Professional Mode”, are now supported, as well as three aspect ratios, 16:9, 9:16 and 1:1, to meet the diverse video creation needs of everyone.

Image to video is currently the most frequently used feature by creators. This is because from a video creation perspective, image to video is more controllable. Creators can use pre-generated images to generate dynamic videos, which greatly reduces the cost and threshold of creating professional videos.

From a video creative perspective, AI provides users with an alternative creative platform. Users can control the movement of the subject in the image through text. For example, the recent online viral videos “Old photos come to life” and “Hug your younger self”,

and the “mushroom turning into a penguin” video, which has been dubbed the “mushroom hallucination video” by netizens, which shows the attributes of AI as a creative tool and provides users with endless possibilities for realizing their creativity.

For Image to video, controlling the movement of the subject in the image is the core, and we provide the following formula for reference:

Prompt word = Subject + Movement, Background + Movement · · · ·

Subject: the subject in the picture, such as a person, animal, or object;

Movement: the trajectory of movement that the subject wants to achieve;

Background: the background in the picture.

The core components of the above formula are the subject and the movement. Unlike Text to video, Image to video already has a scene, so you only need to describe the subject in the image and the movement you want the subject to achieve. If multiple movements of multiple subjects are involved, just list them in order. AI will expand the prompt words based on our expression and understanding of the image to generate a video that meets expectations.

If you want to “make the Mona Lisa in the painting wear sunglasses”, when we only input “wear sunglasses”, the model will find it difficult to understand the command, so it is more likely to generate the video based on its own judgment.

When AI judges that this is a painting, it is more likely to generate a picture frame exhibition with the effect of camera movement. This is also the reason why still videos are easily generated from photos.

Therefore, we need to describe the “subject + movement” to make the model understand the command, such as “Mona Lisa puts on sunglasses with her hand”, or for multiple subjects “Mona Lisa puts on sunglasses with her hand, and a ray of light appears in the background”. The model will respond more easily.

| prompt |

Wear sunglasses

Mona Lisa puts on sunglasses with her hand

Mona Lisa puts on sunglasses with her hand, and a ray of light appears in the background

| video |

Again, the meaning of the formula is to help you better use the Image to video capability and improve the video drawing rate. More creative ideas need to be explored together, so feel free to communicate with AI!

Here are some high-quality examples shared by creators, let’s take a look~

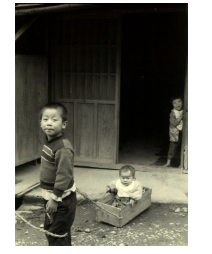

| video |

| prompt and Image input |

Two people hugging

Two boys hugging

A little boy smiling at the camera

A beautiful Chinese girl looking into the distance and smiling

| video |

| prompt and Image input |

A cat kneading dough in the kitchen

A red-crowned crane is looking for food

| video |

| prompt and Image input |

A model is smiling, with the wind blowing her hair

A giant panda is eating an apple

An athlete is riding a bicycle on the road, creating a sense of speed

Flying dust and fluttering clothes

Some tips

Try to use simple words and sentence structures, and avoid using overly complex language.

Movement obeys the laws of physics, so try to describe it using movements that are likely to occur in the picture.

Descriptions that differ greatly from the picture may cause the camera to switch;

it is difficult to generate complex physical movements such as bouncing balls and high throws at this stage;

Video extension

The AI-generated video can be extended by 4 to 5 seconds, and multiple extensions (up to 3 minutes) are supported. The video extension creation can be done by fine-tuning the prompt.

The video extension function is located in the lower left corner of the video after it is generated. There are two modes: “Automatic Extension” and “Custom Creative Extension”. “Automatic Extension” means that there is no need to enter a prompt, and the model will extend the video based on its understanding of the video itself.

“Custom creative extension” means that the user can control the extended video through text. Here, the prompt needs to be related to the original video, and the ‘subject + movement’ of the original video should be specified, so that the extended video will not collapse as much as possible. We provide the following formula for your reference

Prompt = Subject + Movement

Subject: Refers to the subject in the uploaded picture that you want to move. To ensure better text responsiveness, it is better to choose a subject with a good effect;

Movement: refers to the trajectory of the movement that the target subject wishes to achieve.

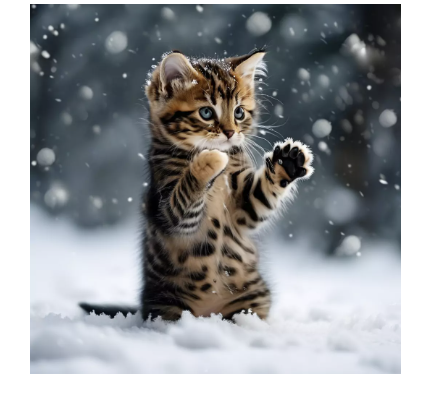

| prompt and Image input |

Continuation x1: A woman stands in the snow, lifts her right hand and touches the brim of her hat

Continuation x2: The woman lowers her hand and looks into the distance s

Prompt description: A kitten walks in the snow

Prompt description: The mushrooms in the plate turn into a group of penguins that climb out and walk in the snow

Prompt description: Many puppies climb out of the box

Prompt description: First-person perspective, racing car game, holding the steering wheel of a sports car, speeding on the highway

Some tips

In the video “Custom Creative Extension”, the prompt needs to be consistent with the original video subject. Unrelated text may cause the camera to switch;

there is a certain probability of extension, and it may be necessary to extend multiple times to generate a video that meets expectations;

Advanced features

Standard Mode and Professional Mode

“Standard Mode” is a model that generates videos faster and incurs lower inference costs. You can use Standard Mode to quickly verify the model’s results and meet your creative needs. “Professional Mode” is a model that generates richer video details and incurs higher inference costs. You can use Professional Mode to generate high-quality videos and meet the needs of creators who want to create high-end works.

Standard Mode and Professional Mode each have the following advantages, and you can choose a model to generate videos based on your needs:

Standard Mode: low cost for video generation. Good at generating portraits, animals, and scenes with large dynamic ranges. The generated animals are more endearing, the picture is toned softly, and it is also a model that received praise when some AI video generator was first released.

Professional Mode: high-quality video generation. Good at generating portraits, animals, architecture, landscapes, and other videos. The details are richer, the composition and tone are more advanced, and it is the model that AI uses most for fine video creation at this stage.

input

PrStandard Mode

Professional Mode:

conclusion

Prompt: A giant panda playing guitar by the lake

Standard Mode:The giant panda has natural movements and a cute appearance, and the video has a soft color tone;

Professional Mode:The guitar has richer details, the scene has improved imagination, and the camera movement is more stable.

Prompt: A medieval sailing ship sailing on the sea, a misty night, bright moonlight

Standard Mode:The movements in the picture are natural, the details are not obvious, and the video has a soft color tone;

Professional Mode:The picture is rich in detail, the sails follow the laws of physics, the picture has a cinematic feel, and the camera movement is more stable.

Camera movement control

Camera movement control now supports 6 basic camera movements, including “pan, tilt, zoom in/out, vertical pan, rotating pan, and horizontal pan”, as well as 4 master camera movements, including “left rotation, right rotation, zoom up, and zoom out”, to help creators generate video footage with obvious camera movement effects.

Camera movement control is a type of camera language. To meet the diversity of video creation and allow models to better respond to creators’ control of the camera, the platform adds the function of camera movement control, which controls the camera movement behavior of the video screen with absolute commands. The range of camera movement can be selected by adjusting the displacement parameters. The following are examples of different camera movements for “A panda playing the piano by the lake”:

pan

tilt

zoom in/out

vertical pan

rotating pan

horizontal pan

left rotation

right rotation

zoom up

zoom out

First and last frame capability

The first and last frame function, that is, uploading two pictures, the model will use these two pictures as the first and last frames to generate a video. You can experience this by clicking “Add last frame” in the upper right corner of the “Image to video” function.

The first and last frame function can achieve more precise control over the video. At this stage, it is mainly used in video creation to generate videos with control requirements for the first and last frames. It can better achieve the expected dynamic transition of the generated video. However, it should be noted that the content of the first and last frames of the video needs to be as similar as possible. If the difference is large, it will cause a camera switch.

First frame image

last frame image

video

Some tips

choose two pictures with the same theme and similar content as much as possible, so that the model can be smoothly connected within 5 seconds.

If the two pictures are very different, it may trigger a camera cut. Many creators will use image generation to select similar pictures, and then use the first and last frame capabilities to generate videos. For example